Location: Williamsburg, VA Are you a trained oral historian looking for a welcoming community in which to learn and contribute your talents? The Special Collections Research Center, within the Earl Gregg Swem Library at William & Mary, is seeking a person to lead the oral history program at William & Mary Libraries. In this role, […]

Oral Historian at William & Mary

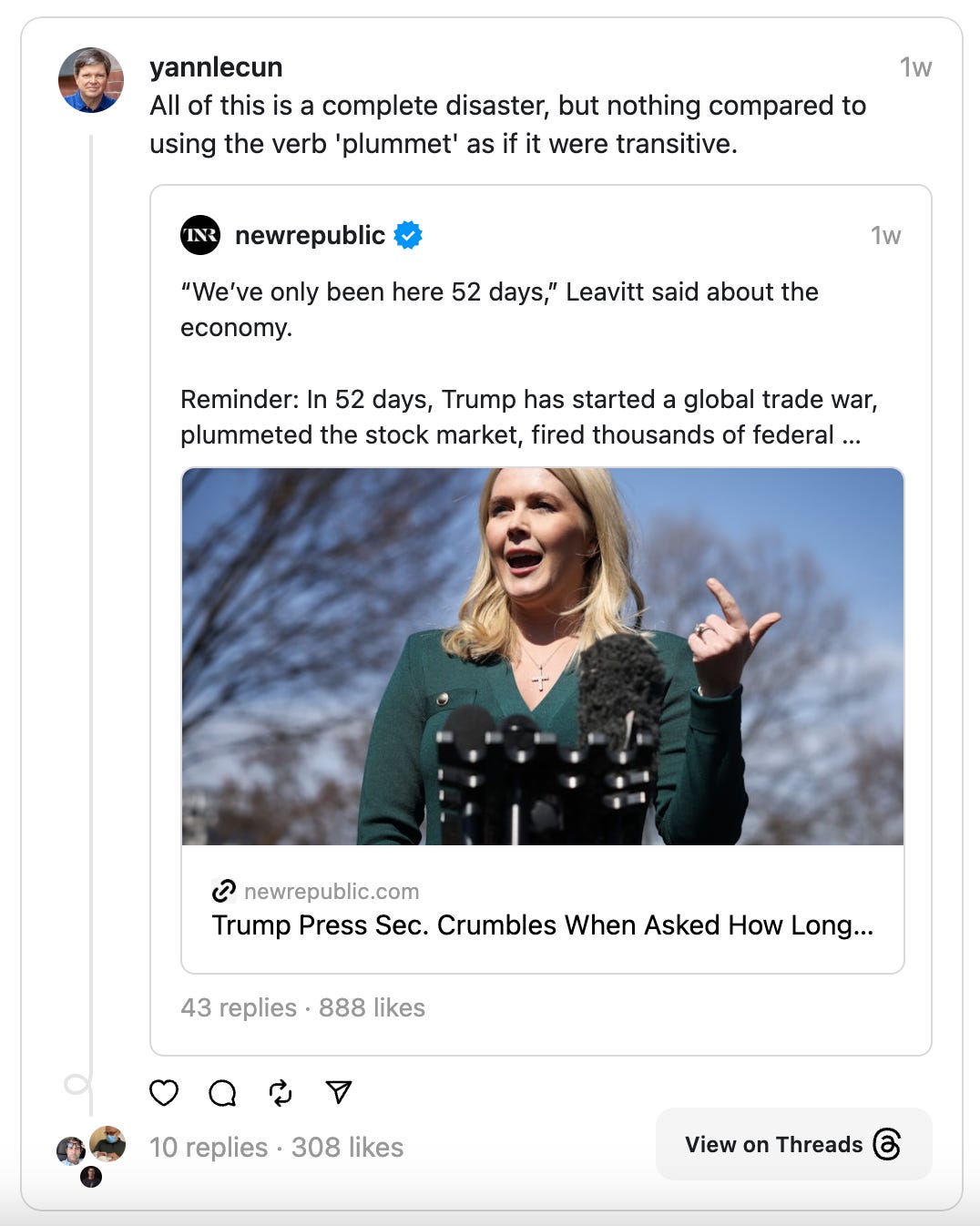

Plummet’s journey

A post from Language Log. Read this and other similar articles at www.languagelog.ldc.upenn.edu/nll/

Yann LeCun’s evaluation of political versus linguistic errors:

His comment is no doubt meant as a joke, but it’s worth exploring the usage that bothers him.

To start with, the English word plummet has already been on a long morpho-syntactic and semantic journey (like nearly all other words). It started as a noun in Old French, plommet, the diminutive of plom “lead”, meaning “ball of lead, plumb bob”, referring to a (typically lead) weight attached to a “plumb line”. Wikipedia tells us that

The instrument has been used since at least the time of ancient Egypt to ensure that constructions are “plumb”, or vertical. It is also used in surveying, to establish the nadir (opposite of zenith) with respect to gravity of a point in space.

It was also used by sailors to estimate the depth of water.

The noun plummet, under various spellings, was borrowed into English as early as the 14th century, and of course was also used metaphorically, as in this example from Shackerley Marmion’s 1632 play Hollands Leaguer:

And when I haue done, I’de faine see all your Artists,

Your Polititians with their Instruments

And Plummets of wit, sound the depth of mee.

And as always, the metaphorical extension got looser, to the point that the noun plummet came to be used to mean simply a “rapid fall” — though this usage seems to be relatively recent, with the OED’s first citation from 1957:

1957 After his plummet from fame, Keaton became a writer. Atchison (Kansas) Daily Globe

Also, like many other English nouns, plummet was soon used as a verb — though interestingly, the OED tells us that the first uses were transitive, connected to the depth-sounding sense of the noun:

1620 This ought to be the barre, cancell and limit of our too scrutinous nature, which often will assay to plummet the fathomlesse and bottomlesse sea of Gods most secret and hidden actions. T. Walkington, Rabboni

The (now more common) intransitive use, meaning (literally or figuratively) “To drop or fall rapidly or precipitously”, came a couple of hundred years later, with the OED’s first citation from 1845:

1845 Our capacity for delight plummeted. N. P. Willis, Dashes at Life with Free Pencil

OK, so what about the usage that bothered Yann LeCun:

In just 52 days, Trump has started a global trade war, plummeted the stock market, fired thousands of federal workers, slashed government funding, and sparked fears of a recession.

The author of that sentence has evolved plummet following the pattern of (the English version of) the causative-inchoative alternation:

The Causative/Inchoative alternation involves pairs of verbs, one of which is causative and the other non-causative syntactically and semantically (e.g., John broke the window vs. The window broke). In its causative use, an alternating verb is used transitively and understood as externally caused. When used non-causatively, the verb is intransitive and interpreted as spontaneous.

(Note that inchoative in this context means something like “change of state”, applied to the intransitive subject; and in the (transitive) causative version, the subject causes the object to undergo the state change.)

There are many English verbs exhibiting this alternation: boil, melt, sink, open, bake, bounce, blacken, hang, close, cook, cool, dry, freeze, move, roll, rotate, spin, twist, shatter, thaw, thicken, whiten, widen, march, jump, …

And it’s common in English to extend this pattern to create a causative transitive verb from an intransitive inchoative one, as I did with evolve in an earlier sentence (though others have done this before me…).

But attempts at such extension don’t always go smoothly, and plummet is not the only example of possible failure. Thus fall is an intransitive inchoative verb, but “*Those actions are going to fall the market” doesn’t work. Why?

This post is already too long, so for now I’ll just direct you to Beth Levin and Malka Rappaport Hovav, “A preliminary analysis of causative verbs in English”, Lingua 1994:

This paper investigates the phenomena that come under the label ‘causative alternation’ in English, as illustrated in the transitive and intransitive sentence pair Antonia broke the vase / The vase broke. Central to our analysis is a distinction between verbs which are inherently monadic and verbs which are inherently dyadic. Given this distinction, much of the relevant data is explained by distinguishing two processes that give rise to causative alternation verbs. The first, and by far more pervasive process, forms lexical detransitive verbs from certain transitive verbs with a causative meaning. The second process, which is more restricted in its scope, results in the existence of causative transitive verbs related to some intransitive verbs. Finally, this study provides further insight into the semantic underpinnings of the Unaccusativity Hypothesis (Perlmutter 1978).

Among other things, they note the difference between “verbs of manner of motion such as roll, run, jog, and bounce”, which have causative counterparts, and “verbs of directed motion such as come, go, rise, and fall”, which don’t. You can read the paper to learn their theory of why this matters, but we can note that the intransitive verb plummet is arguably in between those categories, interpretable either way.

None of the dictionaries that I’ve checked have a causative-transitive sense for plummet = “cause to fall rapidly”, and I don’t think I’ve ever heard or read it — but it wouldn’t be a shock to find other examples, and it’s also understandable that it would trigger someone’s “wrong!” reaction.

AI ‘brain decoder’ can read a person’s thoughts with just a quick brain scan and almost no training

Scientists have made new improvements to a “brain decoder” that uses artificial intelligence (AI) to convert thoughts into text.

Their new converter algorithm can quickly train an existing decoder on another person’s brain, the team reported in a new study. The findings could one day support people with aphasia, a brain disorder that affects a person’s ability to communicate, the scientists said.

A brain decoder uses machine learning to translate a person’s thoughts into text, based on their brain’s responses to stories they’ve listened to. However, past iterations of the decoder required participants to listen to stories inside an MRI machine for many hours, and these decoders worked only for the individuals they were trained on.

“People with aphasia oftentimes have some trouble understanding language as well as producing language,” said study co-author Alexander Huth, a computational neuroscientist at the University of Texas at Austin (UT Austin). “So if that’s the case, then we might not be able to build models for their brain at all by watching how their brain responds to stories they listen to.”

In the new research, published Feb. 6 in the journal Current Biology, Huth and co-author Jerry Tang, a graduate student at UT Austin, investigated how they might overcome this limitation. “In this study, we were asking, can we do things differently?” he said. “Can we essentially transfer a decoder that we built for one person’s brain to another person’s brain?”

The researchers first trained the brain decoder on a few reference participants the long way — by collecting functional MRI data while the participants listened to 10 hours of radio stories.

Then, they trained two converter algorithms on the reference participants and on a different set of “goal” participants: one using data collected while the participants spent 70 minutes listening to radio stories, and the other while they spent 70 minutes watching silent Pixar short films unrelated to the radio stories.

Using a technique called functional alignment, the team mapped out how the reference and goal participants’ brains responded to the same audio or film stories. They used that information to train the decoder to work with the goal participants’ brains, without needing to collect multiple hours of training data.
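To make the idea concrete, here is a minimal sketch of one way such a converter could work. The article does not describe the study's actual model, so everything here is an assumption for illustration: the converter is a ridge-regularized linear map, and the names `fit_converter`, `convert`, `goal_shared`, and `reference_shared` are hypothetical. Each row is a time point recorded while both participants took in the same stimulus, and each column is a voxel.

```python
def transpose(m):
    return [list(col) for col in zip(*m)]

def matmul(a, b):
    bt = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]

def solve(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    aug = [list(a[i]) + list(b[i]) for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [v - f * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def fit_converter(goal, reference, ridge=1e-9):
    """Assumed model: find W minimizing ||goal @ W - reference||^2 + ridge * ||W||^2."""
    gt = transpose(goal)
    gram = matmul(gt, goal)
    for i in range(len(gram)):
        gram[i][i] += ridge  # small penalty keeps the system well conditioned
    return solve(gram, matmul(gt, reference))

def convert(goal_responses, w):
    """Map goal-participant responses into the reference participant's space."""
    return matmul(goal_responses, w)

# Toy data (made up): four shared time points, two voxels per participant.
goal_shared = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]]
reference_shared = [[2.0, 0.0], [1.0, 1.0], [3.0, 1.0], [5.0, 1.0]]

w = fit_converter(goal_shared, reference_shared)
mapped = convert([[3.0, 2.0]], w)  # a new goal-participant response
```

In this toy setup, `mapped` is what would be handed to the decoder already trained on the reference participant, which is why only the short shared-stimulus session is needed for the new person.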

Next, the team tested the decoders using a short story that none of the participants had heard before. Although the decoder’s predictions were slightly more accurate for the original reference participants than for the ones who used the converters, the words it predicted from each participant’s brain scans were still semantically related to those used in the test story.

For example, a section of the test story included someone discussing a job they didn’t enjoy, saying “I’m a waitress at an ice cream parlor. So, um, that’s not … I don’t know where I want to be but I know it’s not that.” The decoder using the converter algorithm trained on film data predicted: “I was at a job I thought was boring. I had to take orders and I did not like them so I worked on them every day.” Not an exact match — the decoder doesn’t read out the exact sounds people heard, Huth said — but the ideas are related.

“The really surprising and cool thing was that we can do this even not using language data,” Huth told Live Science. “So we can have data that we collect just while somebody’s watching silent videos, and then we can use that to build this language decoder for their brain.”

Using the video-based converters to transfer existing decoders to people with aphasia may help them express their thoughts, the researchers said. It also reveals some overlap between the ways humans represent ideas from language and from visual narratives in the brain.

“This study suggests that there’s some semantic representation which does not care from which modality it comes,” Yukiyasu Kamitani, a computational neuroscientist at Kyoto University who was not involved in the study, told Live Science. In other words, it helps reveal how the brain represents certain concepts in the same way, even when they’re presented in different formats.

The team’s next steps are to test the converter on participants with aphasia and “build an interface that would help them generate language that they want to generate,” Huth said.

Don’t Miss Out – Celebrate the First OHA Day!

Join us in supporting the Oral History Association by giving to the general endowment and sharing what OHA means to you! Your gift today will be DOUBLED! Thanks to a generous pledge from OHA Past Presidents and the History Task Force, every dollar you donate—up to $7,000—will be matched. Plus, OHA Council members have shown […]

Are you feeling irregular?

Q: I was surprised when autocorrect changed “intermittent” to “intermit.” I checked and, lo and behold, there is a word “intermit.” Does it not strike you as odd that the base-form is less known than its “built-up” version?

A: We don’t use, or recommend using, the autocorrect function in a word processor. Our spell-checkers flag possible misspellings but don’t automatically “fix” them. Word processors have dictionaries, but not common sense—at least not yet!

As for the words you’re asking about, the adjective “intermittent” (irregular or occurring at intervals) is indeed more common than the verb “intermit” (to suspend or stop). In fact, the verb barely registered when we compared the terms on Google’s Ngram Viewer.

However, “intermittent” isn’t derived from “intermit,” though both ultimately come from different forms of the Latin verb intermittere (to interrupt, leave a gap, suspend, or stop), according to the Oxford English Dictionary. The Latin verb combines inter (between) and mittere (to send, let go, put).

When “intermit” first appeared in English in the mid-16th century, it meant to interrupt someone or something, a sense the OED describes as obsolete.

The modern sense of the verb—“to leave off, give over, discontinue (an action, practice, etc.) for a time; to suspend”—showed up in the late 16th century.

It means “leave off” in the dictionary’s earliest citation for the modern usage: “Occasions of intermitting the writing of letters” (from A Panoplie of Epistles, 1576, by Abraham Fleming, an author, editor, and Anglican clergyman).

As we’ve said, “intermit” isn’t seen much nowadays. English speakers are more likely to use other verbs with similar senses, such as “cease,” “quit,” “stop,” “discontinue,” “interrupt,” or “suspend.”

When the adjective “intermittent” appeared in the early 17th century, Oxford says, it described a medical condition such as a pulse, fever, or cramp “coming at intervals; operating by fits and starts.”

The earliest OED citation is from an English translation of Plutarch’s Ἠθικά (Ethica, Ethics), commonly known by its Latin title Moralia (The Morals), a collection of essays and speeches originally published in Greek around the end of the first century:

“Beating within the arteries here and there disorderly, and now and then like intermittent pulses” (from The Philosophie, Commonly Called, The Morals, 1603, translated by Philemon Holland).

The adjective later took on several other technical senses involving irregular movement, but we’ll skip to its use in everyday English to mean occurring at irregular intervals. The earliest OED citation for this “general use” is expanded here:

“Northfleet a disunited Village of 3 Furlongs, with an intermittent Market on Tuesdays, from Easter till Whitsuntide only” (Britannia, or, An illustration of the Kingdom of England and Dominion of Wales, 1675, by the Scottish geographer John Ogilby).